Having explored the physical and psychological mechanisms of control in a previous article, and their deployment through cultural engineering in another, we now turn to their ultimate evolution: the automation of consciousness control through digital systems.

In my research on the tech-industrial complex, I’ve documented how today’s digital giants weren’t simply co-opted by power structures; many were potentially designed from their inception as tools for mass surveillance and social control. From Google’s origins in a DARPA-funded CIA project to Amazon founder Jeff Bezos’ familial ties to ARPA, these weren’t just successful startups that later aligned with government interests.

What Tavistock discovered through years of careful study—emotional resonance trumps facts, peer influence outweighs authority, and indirect manipulation succeeds where direct propaganda fails—now forms the foundational logic of social media algorithms. Facebook’s emotion manipulation study and Netflix’s A/B testing of thumbnails (explored in detail later) exemplify the digital automation of these century-old insights, as AI systems perform billions of real-time experiments, continuously refining the art of influence at an unprecedented scale.

Just as Laurel Canyon served as a physical space for steering culture, today’s digital platforms function as virtual laboratories for consciousness control, reaching further and operating with far greater precision. Social media platforms have scaled these principles through ‘influencer’ amplification and engagement metrics. The discovery that indirect influence outperforms direct propaganda now shapes how platforms subtly adjust content visibility. What once required years of meticulous psychological study can now be tested and optimized in real time, with algorithms leveraging billions of interactions to perfect their methods of influence.

The manipulation of music reflects a broader evolution in cultural control: what began with localized programming, like Laurel Canyon’s experiments in counterculture, has now transitioned into global, algorithmically driven systems. These digital tools automate the same mechanisms, shaping consciousness on an unprecedented scale.

Netflix’s approach translates Bernays’ manipulation principles into digital form, which is perhaps unsurprising given that co-founder Marc Randolph is Bernays’ great-nephew and Sigmund Freud’s great-grand-nephew. Where Bernays used focus groups to test messaging, Netflix conducts massive A/B testing of thumbnails and titles, showing different images to different users based on their psychological profiles.

Their recommendation algorithm doesn’t just suggest content—it shapes viewing patterns by controlling visibility and context, similar to how Bernays orchestrated comprehensive promotional campaigns that shaped public perception through multiple channels. Just as Bernays understood how to create the perfect environment to sell products—like promoting music rooms in homes to sell pianos—Netflix crafts personalized interfaces that guide viewers toward specific content choices. Their approach to original content production similarly relies on analyzing mass psychological data to craft narratives for specific demographic segments.

More insidiously, Netflix’s content strategy actively shapes social consciousness through selective promotion and burial of content. While films supporting establishment narratives receive prominent placement, documentaries questioning official accounts often find themselves buried in the platform’s least visible categories or excluded from recommendation algorithms entirely. Even successful films like What Is a Woman? faced systematic suppression across multiple platforms, demonstrating how digital gatekeepers can effectively erase challenging perspectives while maintaining the illusion of open access.

I experienced this censorship firsthand. I was fortunate enough to serve as a producer for Anecdotals, directed by Jennifer Sharp, a film documenting Covid-19 vaccine injuries, including her own. YouTube removed it on its first day online, claiming individuals couldn’t discuss their own vaccine experiences. Only after Senator Ron Johnson’s intervention was the film reinstated, a telling example of how platform censorship silences personal narratives that challenge official accounts.

This gatekeeping extends across the digital landscape. By controlling which documentaries appear prominently, which foreign films reach American audiences, and which perspectives get highlighted in their original programming, platforms like Netflix act as cultural gatekeepers—just as Bernays managed public perception for his corporate clients. Where earlier systems relied on human gatekeepers to shape culture, streaming platforms use data analytics and recommendation algorithms to automate the steering of consciousness. The platform’s content strategy and promotion systems represent Bernays’ principles of psychological manipulation operating at an unprecedented scale.

Reality TV: Engineering the Self

Before social media turned billions into their own content creators, Reality TV perfected the template for self-commodification. The Kardashians exemplified this transition: transforming from reality TV stars into digital-age influencers, they showed how to convert personal authenticity into a marketable brand. Their show didn’t just reshape societal norms around wealth and consumption—it provided a masterclass in abandoning genuine human experience for carefully curated performance. Audiences learned that being oneself was less valuable than becoming a brand, that authentic moments mattered less than engineered content, and that real relationships were secondary to networked influence.

This transformation from person to persona would reach its apex with social media, where billions now willingly participate in their own behavioral modification. Users learn to suppress authentic expression in favor of algorithmic rewards, to filter genuine experience through the lens of potential content, and to value themselves not by internal measures but through metrics of likes and shares. What Reality TV pioneered—the voluntary surrender of privacy, the replacement of authentic self with marketable image, the transformation of life into content—social media would democratize at a global scale. Now anyone could become their own reality show, trading authenticity for engagement.

Instagram epitomizes this transformation, training users to view their lives as content to be curated, their experiences as photo opportunities, and their memories as stories to be shared with the public. The platform’s ‘influencer’ economy turns authentic moments into marketing opportunities, teaching users to modify their actual behavior—where they go, what they eat, how they dress—to create content that algorithms will reward. This isn’t just sharing life online—it’s reshaping life itself to serve the digital marketplace.

Even as these systems grow more pervasive, their limits are becoming increasingly visible. The same tools that make it possible to steer cultural currents also expose the fragility of that control, as audiences begin to challenge manipulative narratives.

Cracks in the System

Despite its sophistication, the system of control is beginning to show cracks. Increasingly, the public is pushing back against blatant attempts at cultural engineering, as evidenced by recent consumer boycotts and election results.

Recent attempts at obvious cultural exploitation, such as corporate marketing campaigns and celebrity-driven narratives, have begun to fail, signaling a turning point in public tolerance for manipulation. When Bud Light and Target—companies with their own deep establishment connections—faced massive consumer backlash in 2023 over their social messaging campaigns, the speed and scale of the rejection marked a significant shift in consumer behavior. Major investment firms like BlackRock faced unprecedented pushback against ESG initiatives, seeing significant outflows that forced them to recalibrate their approach. Even celebrity influence lost its power to shape public opinion—when dozens of A-list celebrities united behind one candidate in the 2024 election, their coordinated endorsements not only failed to sway voters but may have backfired, suggesting a growing public fatigue with manufactured consensus.

The public is increasingly recognizing these manipulation patterns. When viral videos expose dozens of news anchors reading identical scripts about ‘threats to our democracy,’ the facade of independent journalism crumbles, revealing the continued operation of systematic narrative control. Legacy media’s authority is eroding, as repeated exposures of staged narratives and misrepresented sources reveal the persistence of centralized messaging systems.

Even the fact-checking industry, designed to bolster official narratives, faces growing skepticism as people discover that these ‘independent’ arbiters of truth are often funded by the very power structures they claim to monitor. Rather than guarding the truth, they serve as enforcers of acceptable thought.

The public awakening extends beyond corporate messaging to a broader realization that supposedly organic social changes are often engineered. For example, while most people only became aware of the Tavistock Institute through recent controversies about gender-affirming care, their reaction hints at a deeper realization: that cultural shifts long accepted as natural evolution might instead have institutional authors. Though few yet understand Tavistock’s historical role in shaping culture since our grandparents’ time, a growing number of people are questioning whether seemingly spontaneous social transformations were, in fact, deliberately orchestrated.

This growing recognition signals a fundamental shift: as audiences become more conscious of manipulation methods, the effectiveness of these control systems begins to diminish. Yet the system is designed to provoke intense emotional responses—the more outrageous the better—precisely to prevent critical analysis. By keeping the public in a constant state of reactionary outrage, whether defending or attacking figures like Trump or Musk, it successfully distracts from examining the underlying power structures these figures operate within. The heightened emotional state serves as a perfect shield against rational inquiry.

Before we examine today’s digital control mechanisms in detail, consider the evolution from Edison’s hardware monopolies to Tavistock’s psychological operations to today’s algorithmic control systems. It is more than a natural historical progression: each stage intentionally built upon the last to achieve the same goal. Physical control of media distribution evolved into psychological manipulation of content, which has now been automated through digital systems. As AI systems become more sophisticated, they don’t just automate these control mechanisms; they perfect them, learning and adapting in real time across billions of interactions.

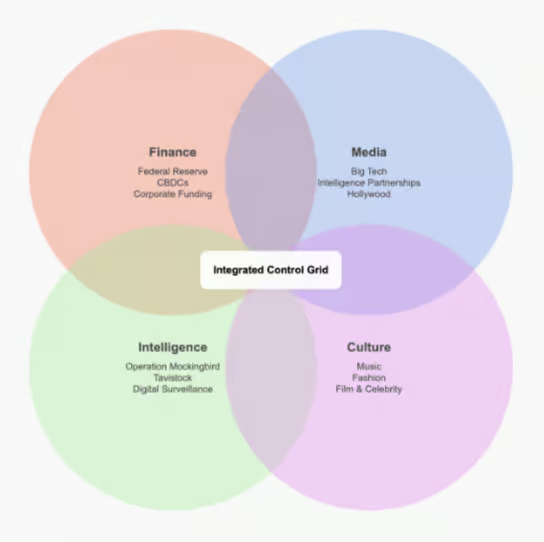

We can visualize how distinct domains of power—finance, media, intelligence, and culture—have converged into an integrated grid of social control. While these systems initially operated independently, they now function as a unified network, each reinforcing and amplifying the others. This framework, refined over a century, reaches its ultimate expression in the digital age, where algorithms automate what once required elaborate coordination between human authorities.